onnx-web

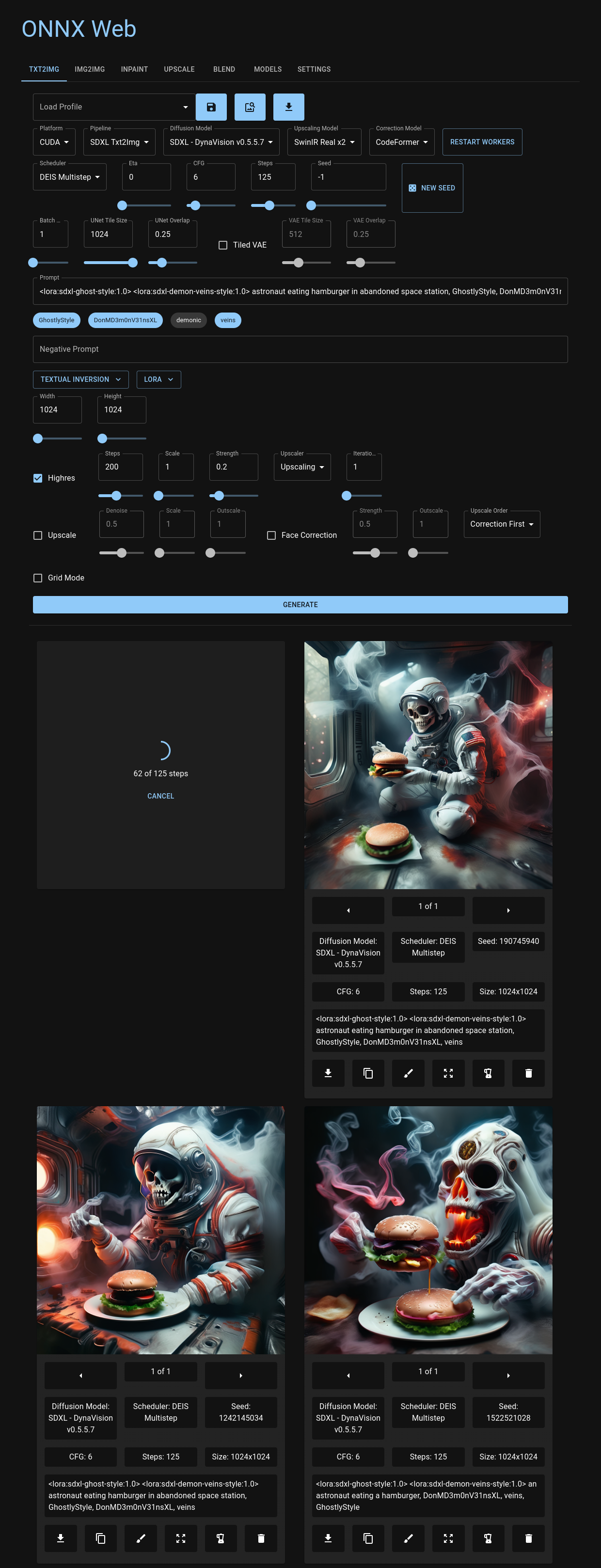

onnx-web is designed to simplify the process of running Stable Diffusion and other ONNX models so you can focus on making high-quality, high-resolution art. With hardware acceleration on both AMD and Nvidia GPUs and a reliable CPU software fallback, it offers the full feature set on desktops, laptops, and multi-GPU servers.

You can navigate through the web UI, hosted on GitHub Pages and accessible across all major browsers, including on mobile devices. There you can choose diffusion models and accelerators for each image pipeline, with easy access to the image parameters that define each mode. Whether you're uploading images or expressing your artistic touch through inpainting and outpainting, onnx-web provides an environment that's as user-friendly as it is powerful. Recent output images are presented beneath the controls, serving as a visual reference to revisit previous parameters or remix your earlier outputs.

Dive deeper into the onnx-web experience with its API, compatible with both Linux and Windows. This RESTful interface seamlessly integrates various pipelines from the HuggingFace diffusers library, offering valuable metadata on models and accelerators, along with detailed outputs from your creative runs.

Embark on your generative art journey with onnx-web, and explore its capabilities through our detailed documentation site. Find a comprehensive getting started guide, setup guide, and user guide waiting to empower your creative endeavors!

Please check out the documentation site for more info.

Features

This is an incomplete list of new and interesting features:

- supports SDXL and SDXL Turbo
- wide variety of schedulers: DDIM, DEIS, DPM SDE, Euler Ancestral, LCM, UniPC, and more
- hardware acceleration on both AMD and Nvidia
  - tested on CUDA, DirectML, and ROCm
  - half-precision support for low-memory GPUs on both AMD and Nvidia
  - software fallback for CPU-only systems
- web app to generate and view images
  - hosted on GitHub Pages, from your CDN, or locally
  - persists your recent images and progress as you change tabs
  - queue up multiple images and retry errors
  - translations available for English, French, German, and Spanish (please open an issue for more)
- supports many `diffusers` pipelines
  - txt2img
  - img2img
  - inpainting, with mask drawing and upload
  - panorama, for both SD v1.5 and SDXL
  - upscaling, with ONNX acceleration
- add and use your own models
- blend in additional networks
  - permanent and prompt-based blending
  - supports LoRA and LyCORIS weights
  - supports Textual Inversion concepts and embeddings
    - each layer of the embeddings can be controlled and used individually
- ControlNet
  - image filters for edge detection and other methods
  - with ONNX acceleration
- highres mode
  - runs img2img on the results of the other pipelines
  - multiple iterations can produce 8k images and larger
- multi-stage and region prompts
  - seamlessly combine multiple prompts in the same image
  - provide prompts for different areas in the image and blend them together
  - change the prompt for highres mode and refine details without recursion
- infinite prompt length
- image blending mode
  - combine images from history
- upscaling and correction
  - upscaling with Real ESRGAN, SwinIR, and Stable Diffusion
  - face correction with CodeFormer and GFPGAN
- API server can be run remotely
  - REST API can be served over HTTPS or HTTP
  - background processing for all image pipelines
  - polling for image status, plays nice with load balancers
- OCI containers provided
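
The background processing and status polling mentioned above can be sketched from the client side. This is only an illustration under stated assumptions: `poll_until_done` and its `fetch_status` callable are hypothetical, and the `"status"` field with values `"success"` and `"failed"` is invented for the example rather than taken from the onnx-web API. The point is that each check is a short, independent request, which is presumably why polling works well behind a load balancer.

```python
import time
from typing import Any, Callable, Dict


def poll_until_done(
    fetch_status: Callable[[], Dict[str, Any]],
    interval: float = 1.0,
    max_interval: float = 10.0,
    timeout: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, Any]:
    """Call fetch_status repeatedly until the job finishes or the timeout expires.

    fetch_status is a hypothetical callable that performs one short HTTP
    request and returns the decoded JSON body as a dict.
    """
    deadline = clock() + timeout
    delay = interval
    while True:
        status = fetch_status()
        # Assumed response shape: a "status" key with a terminal value.
        if status.get("status") in ("success", "failed"):
            return status
        if clock() >= deadline:
            raise TimeoutError("image job did not finish before the timeout")
        sleep(delay)
        # Back off gradually so long-running jobs do not flood the server.
        delay = min(delay * 2, max_interval)
```

In practice `fetch_status` would wrap a single GET request against the server. Passing `sleep` and `clock` as parameters keeps the loop easy to test without waiting.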

Setup

There are a few ways to run onnx-web:

- cross-platform:

- on Windows:

You only need to run the server and should not need to compile anything. The client GUI is hosted on GitHub Pages and is included with the Windows all-in-one bundle.

The extended setup docs have been moved to the setup guide.

Adding your own models

You can add your own models by downloading them from the HuggingFace Hub or Civitai or by converting them from local files, without making any code changes. You can also download and blend in additional networks, such as LoRAs and Textual Inversions, using tokens in the prompt.
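
As a rough illustration of prompt-based blending, the sketch below separates network tokens from the rest of a prompt. The `<lora:name:weight>` and `<inversion:name:weight>` shapes are an assumption made for this example, and `split_prompt` and `NetworkToken` are hypothetical helpers, not part of onnx-web. The user guide is the reference for the real token syntax.

```python
import re
from typing import List, NamedTuple, Tuple


class NetworkToken(NamedTuple):
    kind: str      # "lora" or "inversion" (assumed token kinds)
    name: str      # name of the additional network
    weight: float  # blending weight given in the prompt


# Assumed token shape: <kind:name:weight>, for example <lora:ink-style:0.8>.
TOKEN_PATTERN = re.compile(r"<(lora|inversion):([^:<>]+):(-?\d+(?:\.\d+)?)>")


def split_prompt(prompt: str) -> Tuple[str, List[NetworkToken]]:
    """Return the prompt text without tokens, plus the tokens that were found."""
    tokens = [
        NetworkToken(kind, name, float(weight))
        for kind, name, weight in TOKEN_PATTERN.findall(prompt)
    ]
    # Remove the tokens, then collapse the whitespace they leave behind.
    text = re.sub(r"\s+", " ", TOKEN_PATTERN.sub("", prompt)).strip()
    return text, tokens


text, tokens = split_prompt("a castle <lora:ink-style:0.8> at dusk <inversion:autumn:1.0>")
print(text)
print(tokens)
```

Keeping the weight inside the token is what makes the blending prompt-based: the same model can be reused with a different mix of networks for each image, with no permanent change to the model files.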

Usage

Known errors and solutions

Please see the Known Errors section of the user guide.

Running the containers

This has been moved to the server admin guide.

Credits

Some of the conversion and pipeline code was copied or derived from code in:

- `Amblyopius/Stable-Diffusion-ONNX-FP16`
  - GPL v3: https://github.com/Amblyopius/Stable-Diffusion-ONNX-FP16/blob/main/LICENSE
  - https://github.com/Amblyopius/Stable-Diffusion-ONNX-FP16/blob/main/pipeline_onnx_stable_diffusion_controlnet.py
  - https://github.com/Amblyopius/Stable-Diffusion-ONNX-FP16/blob/main/pipeline_onnx_stable_diffusion_instruct_pix2pix.py
- `d8ahazard/sd_dreambooth_extension`
- `huggingface/diffusers`
- `uchuusen/onnx_stable_diffusion_controlnet`
- `uchuusen/pipeline_onnx_stable_diffusion_instruct_pix2pix`

Those parts have their own licenses with additional restrictions on commercial usage, modification, and redistribution. The rest of the project is provided under the MIT license, and I am working to isolate these components into a library.

There are many other good options for using Stable Diffusion with hardware acceleration, including:

- https://github.com/Amblyopius/AMD-Stable-Diffusion-ONNX-FP16

- https://github.com/azuritecoin/OnnxDiffusersUI

- https://github.com/ForserX/StableDiffusionUI

- https://github.com/pingzing/stable-diffusion-playground

- https://github.com/quickwick/stable-diffusion-win-amd-ui

Getting this set up and running on AMD would not have been possible without guides by: