
ONNX Web

onnx-web is a tool for running Stable Diffusion and other ONNX models with hardware acceleration, on both AMD and Nvidia GPUs and with a CPU software fallback.

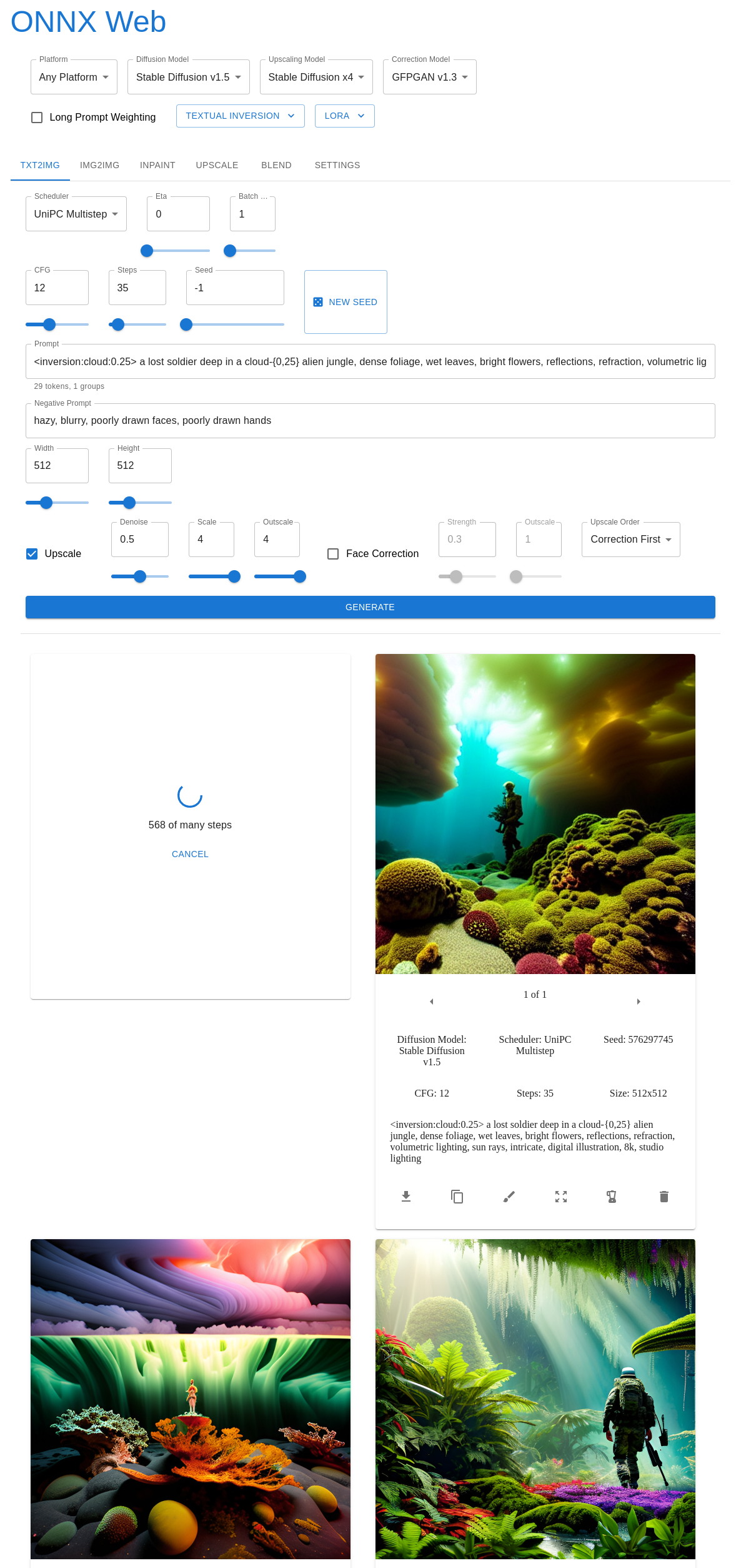

The GUI is hosted on GitHub Pages and runs in all major browsers, including on mobile devices. It allows you to select the model and accelerator being used for each image pipeline. Image parameters are shown for each of the major modes, and you can either upload or paint the mask for inpainting and outpainting. The last few output images are shown below the image controls, making it easy to refer back to previous parameters or save an image from earlier.

The API runs on both Linux and Windows and provides a REST API to run many of the pipelines from diffusers, along with metadata about the available models and accelerators, and the output of previous runs. Hardware acceleration is supported on both AMD and Nvidia for both Linux and Windows, with a CPU fallback capable of running on laptop-class machines.
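As a rough illustration of the accelerator fallback described above, the sketch below picks the first usable execution provider from a preference list. The provider names are the standard ONNX Runtime identifiers, but the preference order and the `pick_provider` helper are assumptions made for this example, not onnx-web's actual selection logic.

```python
# Preference order is an ASSUMPTION for illustration only;
# onnx-web lets you choose the accelerator per pipeline.
PREFERRED_PROVIDERS = [
    "CUDAExecutionProvider",  # Nvidia GPUs
    "ROCMExecutionProvider",  # AMD GPUs on Linux
    "DmlExecutionProvider",   # DirectML on Windows
    "CPUExecutionProvider",   # software fallback
]


def pick_provider(available, preferred=PREFERRED_PROVIDERS):
    """Return the first preferred provider that appears in `available`.

    `pick_provider` is a hypothetical helper, not part of onnx-web.
    """
    for name in preferred:
        if name in available:
            return name
    raise RuntimeError("no supported execution provider found")


if __name__ == "__main__":
    # On a CPU-only laptop, only the software fallback is available.
    print(pick_provider(["CPUExecutionProvider"]))
```

On a real machine, the `available` list could come from `onnxruntime.get_available_providers()`, which reports the providers compiled into the installed ONNX Runtime package.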

Please check out the setup guide to get started and the user guide for more details.

Features

This is an incomplete list of new and interesting features, with links to the user guide:

- hardware acceleration on both AMD and Nvidia
  - tested on CUDA, DirectML, and ROCm
  - half-precision support for low-memory GPUs on both AMD and Nvidia
  - software fallback for CPU-only systems
- web app to generate and view images
  - hosted on GitHub Pages, from your CDN, or locally
  - persists your recent images and progress as you change tabs
  - queue up multiple images and retry errors
  - translations available for English, French, German, and Spanish (please open an issue for more)
- supports many diffusers pipelines
  - txt2img
  - img2img
  - inpainting, with mask drawing and upload
  - upscaling, with ONNX acceleration
- add and use your own models
- blend in additional networks
- infinite prompt length
  - with long prompt weighting
  - expand and control Textual Inversions per-layer
- image blending mode
  - combine images from history
- upscaling and face correction
  - upscaling with Real ESRGAN or Stable Diffusion
  - face correction with CodeFormer or GFPGAN
- API server can be run remotely
  - REST API can be served over HTTPS or HTTP
  - background processing for all image pipelines
  - polling for image status, plays nice with load balancers
- OCI containers provided
  - for all supported hardware accelerators
  - includes both the API and GUI bundle in a single container
  - runs well on RunPod and other GPU container hosting services
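Because image pipelines run in the background and clients poll for status, a client loop might look something like the sketch below. It is a minimal example under stated assumptions: `poll_until_ready` and `make_status_check` are hypothetical helpers, and the status URL and the `ready` field in the JSON response are placeholders rather than the documented onnx-web API. Please check the user guide for the real endpoints.

```python
import json
import time
import urllib.request


def poll_until_ready(check_ready, interval=1.0, timeout=300.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Call check_ready() until it returns True or the timeout elapses.

    Returns True if the job became ready, False on timeout.
    """
    deadline = clock() + timeout
    while True:
        if check_ready():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


def make_status_check(status_url):
    """Build a check_ready() callable for an HTTP status endpoint.

    HYPOTHETICAL: assumes the server answers with JSON such as
    {"ready": true}; the real response shape may differ.
    """
    def check_ready():
        with urllib.request.urlopen(status_url, timeout=10) as response:
            return bool(json.load(response).get("ready"))
    return check_ready
```

Each poll is a short, independent request, which is why this style works well behind load balancers: no long-lived connection has to stay pinned to one server.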


Setup

There are a few ways to run onnx-web:

- cross-platform:

- on Windows:

You only need to run the server and should not need to compile anything. The client GUI is hosted on GitHub Pages and is included with the Windows all-in-one bundle.

The extended setup docs have been moved to the setup guide.

Adding your own models

You can add your own models by downloading them from the HuggingFace Hub or Civitai or by converting them from local files, without making any code changes. You can also download and blend in additional networks, such as LoRAs and Textual Inversions, using tokens in the prompt.
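To give a feel for how prompt tokens could select additional networks, here is a small sketch that separates tokens from the rest of the prompt. The `<kind:name:weight>` syntax, the `lora` and `inversion` kinds, and the `split_prompt` helper are assumptions made for this example; the user guide is the authoritative reference for the real token format.

```python
import re

# ASSUMED token shape for illustration: <lora:name:0.8> or <inversion:name:1.0>
TOKEN_PATTERN = re.compile(r"<(lora|inversion):([^:>]+):(-?\d+(?:\.\d+)?)>")


def split_prompt(prompt):
    """Return (plain_text, networks) for a prompt containing tokens.

    `networks` is a list of (kind, name, weight) tuples in prompt order.
    `split_prompt` is a hypothetical helper, not part of onnx-web.
    """
    networks = [
        (kind, name, float(weight))
        for kind, name, weight in TOKEN_PATTERN.findall(prompt)
    ]
    # Remove the tokens, then collapse the whitespace they leave behind.
    text = " ".join(TOKEN_PATTERN.sub("", prompt).split())
    return text, networks


if __name__ == "__main__":
    print(split_prompt("a castle <lora:example:0.8> at dusk"))
```

Keeping the network selection inside the prompt means the same request format works with or without extra networks, so no code changes are needed to try a new LoRA or Textual Inversion.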

Usage

Known errors and solutions

Please see the Known Errors section of the user guide.

Running the containers

This has been moved to the server admin guide.

Credits

Some of the conversion code was copied or derived from code in:

- https://github.com/huggingface/diffusers/blob/main/scripts/convert_stable_diffusion_checkpoint_to_onnx.py

- https://github.com/d8ahazard/sd_dreambooth_extension/blob/main/dreambooth/sd_to_diff.py

Those parts have their own license with additional restrictions and may need permission for commercial usage.

Getting this set up and running on AMD would not have been possible without guides by:

- https://gist.github.com/harishanand95/75f4515e6187a6aa3261af6ac6f61269

- https://gist.github.com/averad/256c507baa3dcc9464203dc14610d674

- https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Install-and-Run-on-AMD-GPUs

- https://www.travelneil.com/stable-diffusion-updates.html

There are many other good options for using Stable Diffusion with hardware acceleration, including: